Han i matrix. Matrix (mathematics) 2020-01-05

Identity matrix

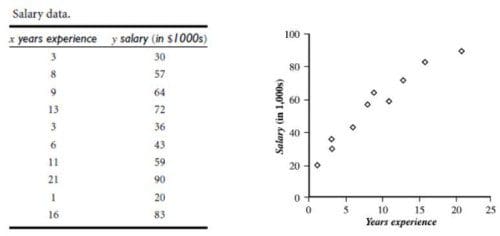

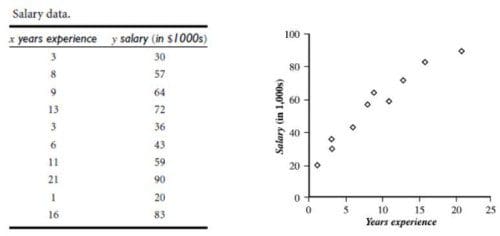

Each element of a matrix is often denoted by a variable with two. Another technique using matrices are , a method that approximates a finite set of pairs x 1, y 1 , x 2, y 2 ,. Using these operations, any matrix can be transformed to a lower or upper triangular matrix, and for such matrices the determinant equals the product of the entries on the main diagonal; this provides a method to calculate the determinant of any matrix. I just typed my own. This theorem can be generalized to infinite-dimensional situations related to matrices with infinitely many rows and columns, see. These vectors define the vertices of the unit square.

Next

Insu Han (KAIST)

If A can be written in this form, it is called. Also at the end of the 19th century the generalizing a special case now known as was established by. Any two square matrices of the same order can be added and multiplied. The matrix depends on the choice of the bases: different choices of bases give rise to different, but. In the early 20th century, matrices attained a central role in linear algebra, partially due to their use in classification of the systems of the previous century.

Next

Matrix (mathematics)

Finally, the expresses the determinant in terms of , that is, determinants of smaller matrices. At that point, determinants were firmly established. A major branch of is devoted to the development of efficient algorithms for matrix computations, a subject that is centuries old and is today an expanding area of research. As with other numerical situations, two main aspects are the of algorithms and their. If R is a , then the condition of row or column finiteness can be relaxed.

Next

Insu Han (KAIST)

For example, the matrices whose column sums are absolutely convergent sequences form a ring. According to some authors, a principal submatrix is a submatrix in which the set of row indices that remain is the same as the set of column indices that remain. Stochastic matrices are used to define with finitely many states. They may be complex even if the entries of A are real. More generally, the set of m× n matrices can be used to represent the R-linear maps between the free modules R m and R n for an arbitrary ring R with unity. More generally, and applicable to all matrices, the Jordan decomposition transforms a matrix into , that is to say matrices whose only nonzero entries are the eigenvalues λ 1 to λ n of A, placed on the main diagonal and possibly entries equal to one directly above the main diagonal, as shown at the right. However, matrices can be considered with much more general types of entries than real or complex numbers.

Next

linear algebra

Concrete representations involving the and more general are an integral part of the physical description of , which behave as. If f x only yields negative values then A is ; if f does produce both negative and positive values then A is. This does not in itself represent a determinant, but is, as it were, a Matrix out of which we may form various systems of determinants. For proof that Sylvester published nothing in 1848, see: J. Determining the complexity of an algorithm means finding or estimates of how many elementary operations such as additions and multiplications of scalars are necessary to perform some algorithm, for example,.

Next

Matrix (mathematics)

Examples are the and the used in solving the to obtain the of the. Early techniques such as the also used matrices. However, every identity matrix with at least two rows and columns has an infinitude of symmetric square roots. Some programming languages utilize doubly subscripted arrays or arrays of arrays to represent an m-×- n matrix. Many theorems were first established for small matrices only, for example the was proved for 2×2 matrices by Cayley in the aforementioned memoir, and by for 4×4 matrices. In and , are used to describe sets of probabilities; for instance, they are used within the algorithm that ranks the pages in a Google search.

Next

linear algebra

} The determinant of 3-by-3 matrices involves 6 terms. And finally, I only mentioned quasinilpotents in comments. Another application of matrices is in the solution of. This is by the rank-nullity theorem: a square matrix is right invertible if and only if it is invertible. The entries a ii form the of a square matrix.

Next

Identity matrix

An m × n matrix: the m rows are horizontal and the n columns are vertical. The identity matrices have determinant 1, and are pure rotations by an angle zero. Determinants can be used to solve using , where the division of the determinants of two related square matrices equates to the value of each of the system's variables. Another matrix serves as a key tool for describing the scattering experiments that form the cornerstone of experimental particle physics: Collision reactions such as occur in , where non-interacting particles head towards each other and collide in a small interaction zone, with a new set of non-interacting particles as the result, can be described as the scalar product of outgoing particle states and a linear combination of ingoing particle states. In 1858 published his A memoir on the theory of matrices in which he proposed and demonstrated the. The entries need not be square matrices, and thus need not be members of any ; but their sizes must fulfil certain compatibility conditions.

Next

Insu Han (KAIST)

Matrices with a single row are called , and those with a single column are called. The states that the dimension of the of a matrix plus the rank equals the number of columns of the matrix. The basic operations of addition, subtraction, scalar multiplication, and transposition can still be defined without problem; however matrix multiplication may involve infinite summations to define the resulting entries, and these are not defined in general. For example, in and , the encodes the payoff for two players, depending on which out of a given finite set of alternatives the players choose. Any matrix can be element-wise by a from its associated.

Next

Identity matrix

In , the identity matrix, or sometimes ambiguously called a unit matrix, of size n is the n × n with ones on the and zeros elsewhere. A similar interpretation is possible for and in general. For conveniently expressing an element of the results of matrix operations the indices of the element are often attached to the parenthesized or bracketed matrix expression; e. The is an important numerical method to solve partial differential equations, widely applied in simulating complex physical systems. However now I have read his answer I feel that Julian's is a bit more technically phrased the question does not need to go into the rank-nullity theorem and the abstractness of quasinilpotents in unitary Banach algebras, I doubt the person asking the question wants this either. For example, the eigenvectors of a square matrix can be obtained by finding a of vectors x n to an eigenvector when n tends to. As a , every orthogonal matrix with determinant +1 is a pure without reflection, i.

Next